Enter the next level of safe navigation

ON BOARD

We support all chips, all sensors and all robots.

highest embedded performance

Single-Sensor Limitations Compromise Vehicle Safety and Autonomy

In today's autonomous systems, dependence on GPS is a critical vulnerability that affects operations across many industries.

Resources

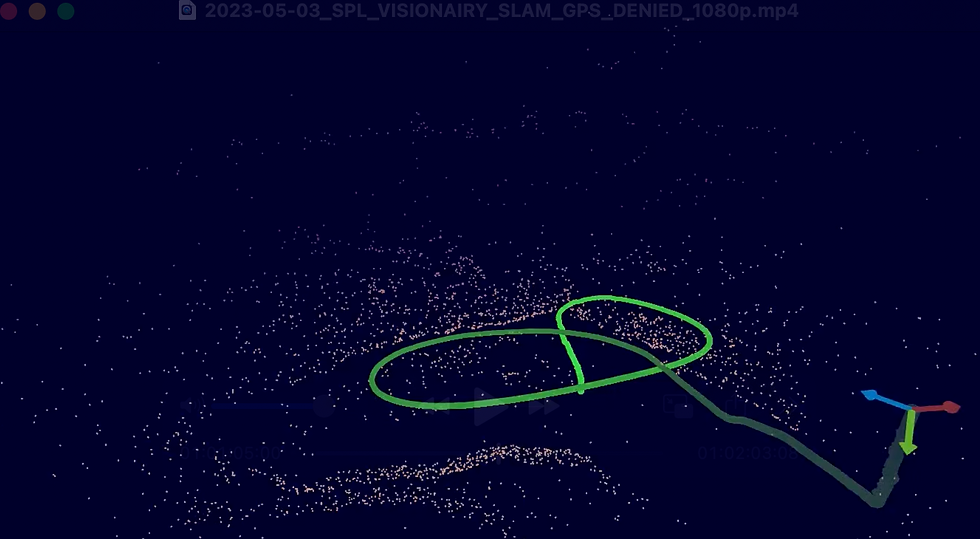

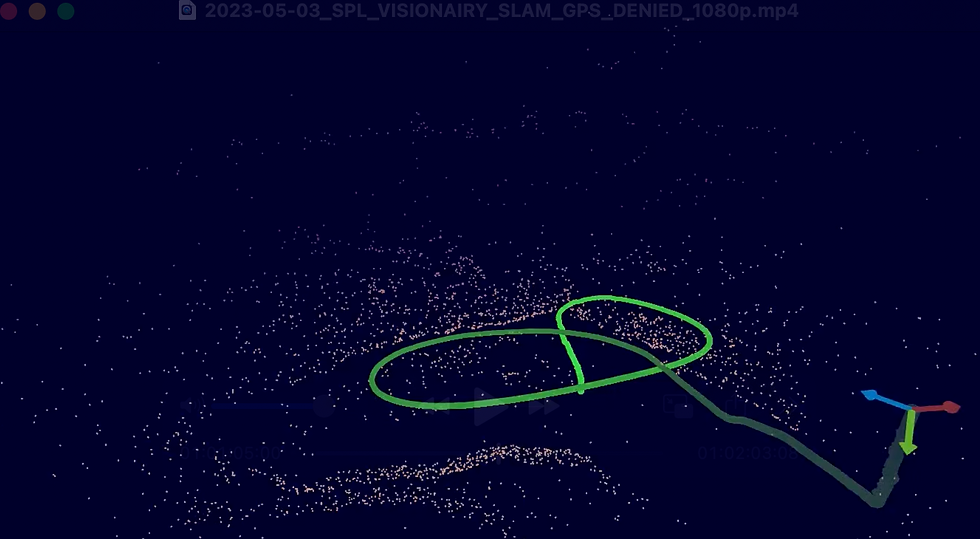

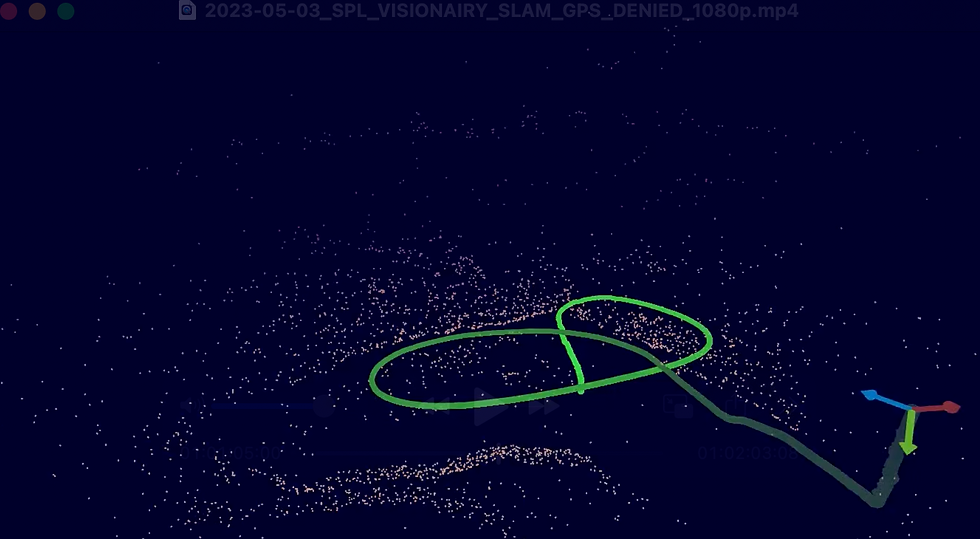

Demo Video

Whitepaper

Blog post

Solutions for Vehicles

Sensor Fusion

The Vehicle Sensing Integration Challenge – Comprehensive Perception in All Conditions

The Cost: Safety Risks, Limited Autonomy, and Development Inefficiency

These sensor integration challenges translate into significant impacts:

Safety Vulnerabilities

Failures or limitations of a single sensor can create dangerous blind spots and lead to accidents.

Restricted Operational Domain

Vehicles can operate autonomously only in the limited conditions in which their primary sensors work optimally.

Degraded Performance

Failing to exploit the complementary strengths of different sensors results in poorer object detection and classification.

Development Complexity

Engineering teams spend excessive resources addressing sensor-specific limitations rather than advancing vehicle capabilities.

To overcome these challenges, vehicle manufacturers and suppliers require advanced sensor fusion solutions that can:

1. Intelligently combine data from multiple sensor types to leverage their complementary strengths.
2. Maintain reliable perception across all weather, lighting, and environmental conditions.
3. Provide redundancy for safety-critical functions to eliminate single points of failure.
4. Resolve conflicting information to create a consistent environmental model.
5. Scale efficiently across different vehicle platforms and sensor configurations.
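Combining sensors, providing redundancy, and resolving conflicts can be illustrated with a textbook technique: inverse-variance weighted fusion with a simple consistency gate. The Python sketch below is not the VISIONAIRY® Fusion implementation. It assumes each sensor reports a scalar estimate of the same quantity (for example, the range to a lead vehicle) together with a variance, and the names `Measurement`, `fuse`, `normalized_residuals`, and `robust_fuse`, the 3-sigma gate, and the readings are all hypothetical. A measurement that disagrees strongly with the others is discarded before the rest are fused.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Measurement:
    sensor: str      # hypothetical sensor label, e.g. "radar"
    value: float     # estimate of the shared quantity, e.g. range in metres
    variance: float  # reported uncertainty sigma^2; must be > 0


def fuse(ms: List[Measurement]) -> Tuple[float, float]:
    """Inverse-variance weighted mean and the variance of that mean."""
    weights = [1.0 / m.variance for m in ms]
    total = sum(weights)
    mean = sum(w * m.value for w, m in zip(weights, ms)) / total
    return mean, 1.0 / total


def normalized_residuals(ms: List[Measurement]) -> List[float]:
    """Squared residual of each measurement against the fused mean, divided
    by the residual's variance (var_i - var_fused), assuming all sensors
    observe the same quantity. Requires at least two measurements."""
    mean, var = fuse(ms)
    return [(m.value - mean) ** 2 / (m.variance - var) for m in ms]


def robust_fuse(ms: List[Measurement], gate: float = 9.0
                ) -> Tuple[float, float, List[str], bool]:
    """Drop the most inconsistent measurement while its normalized residual
    exceeds `gate` (9.0 is roughly a 3-sigma test) and at least three remain,
    then fuse the rest. With two left, a conflict is reported, not resolved."""
    kept = list(ms)
    rejected: List[str] = []
    while len(kept) >= 3:
        r = normalized_residuals(kept)
        worst = max(range(len(kept)), key=lambda i: r[i])
        if r[worst] <= gate:
            break
        rejected.append(kept.pop(worst).sensor)
    mean, var = fuse(kept)
    consistent = len(kept) < 2 or max(normalized_residuals(kept)) <= gate
    return mean, var, rejected, consistent


# Hypothetical readings of the range to a lead vehicle; the LiDAR value
# stands in for a spurious return that conflicts with the other two sensors.
readings = [
    Measurement("camera", 20.4, 1.0),
    Measurement("radar", 20.0, 0.25),
    Measurement("lidar", 35.0, 0.04),
]
mean, var, rejected, ok = robust_fuse(readings)
print(round(mean, 2), round(var, 2), rejected, ok)  # 20.08 0.2 ['lidar'] True
```

Here the conflicting LiDAR value is rejected and the camera and radar readings are fused with weights in a 1:4 ratio, set by their variances. With only two sensors left, this scheme can detect a conflict but cannot tell which sensor is wrong, which is why it needs three or more independent sources to resolve one.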

Our AI Solution

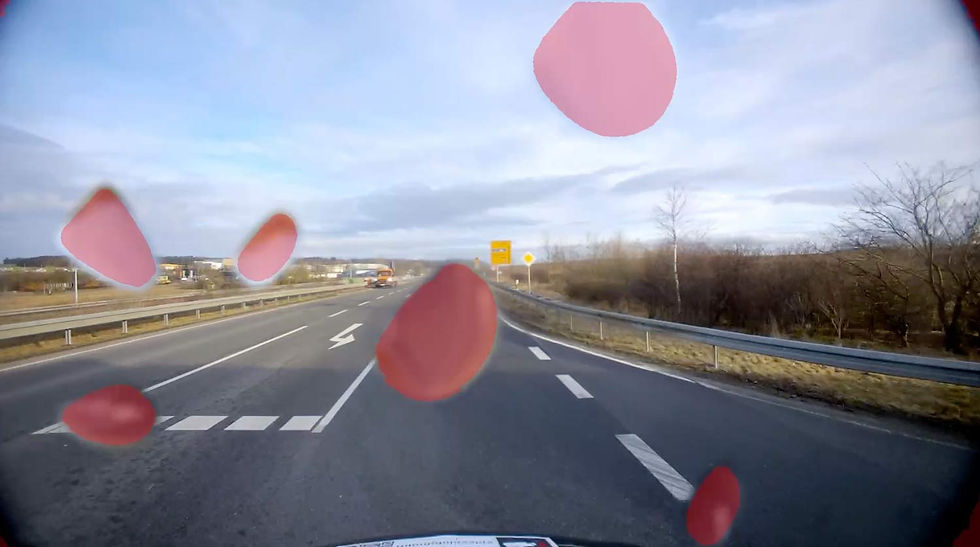

Transforming Vehicle Perception with AI-Powered Sensor Fusion

VISIONAIRY® Fusion gives vehicles detailed awareness of their surroundings through multi-sensor integration.

Our system combines data from cameras, radar, LiDAR, and other sensors into a comprehensive, consistent, and reliable perception of the environment in all conditions. This perception is the foundation for robust driver assistance and autonomous driving systems.

At the heart of VISIONAIRY® Fusion is our cutting-edge sensor fusion architecture:

- Implements early (raw-data), mid-level (feature), and late (object) fusion to extract as much information as possible from the sensors.
- Adjusts the influence of each sensor according to environmental conditions and confidence levels.
- Uses data from previous time steps to improve prediction and to bridge temporary sensor occlusions or failures.
- Maintains precise alignment (calibration) between sensors even when the vehicle is exposed to vibration and environmental stress.
- Explicitly represents and propagates uncertainty to enable robust decision-making.
- Is designed for automotive-grade compute platforms, delivering high performance within strict power constraints.
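Adaptive sensor weighting, temporal fusion through occlusions, and explicit uncertainty can be illustrated together with a standard Kalman filter. The sketch below is a toy under stated assumptions, not the VISIONAIRY® Fusion design: it tracks one object along a single axis with a constant-velocity model, `Track` is an illustrative name, and the per-measurement `confidence` score and all noise values are hypothetical. Lower confidence inflates the measurement variance so that reading gets less weight; when no measurement arrives (an occlusion), the filter only predicts and the position variance grows until the next measurement is fused.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Track:
    """Hypothetical 1-D constant-velocity track. The state is
    [position, velocity]; P is its 2x2 covariance, so the filter's
    uncertainty is carried explicitly alongside the estimate."""
    pos: float
    vel: float
    P: List[List[float]] = field(
        default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])

    def predict(self, dt: float, q: float = 0.5) -> None:
        """Propagate state and covariance by dt seconds; q is the (assumed)
        white-acceleration process-noise intensity."""
        (p00, p01), (p10, p11) = self.P
        self.pos += self.vel * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]]
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt ** 3 / 3,
             p01 + dt * p11 + q * dt ** 2 / 2],
            [p10 + dt * p11 + q * dt ** 2 / 2,
             p11 + q * dt],
        ]

    def update(self, z: float, base_var: float, confidence: float) -> None:
        """Fuse a position measurement z. A lower confidence in (0, 1]
        inflates the measurement variance, so this reading gets less weight."""
        r = base_var / max(confidence, 1e-3)
        (p00, p01), (p10, p11) = self.P
        s = p00 + r                # innovation variance (H = [1, 0])
        k0, k1 = p00 / s, p10 / s  # Kalman gain
        y = z - self.pos           # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P <- (I - K H) P
        self.P = [[p00 - k0 * p00, p01 - k0 * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


# Hypothetical frames: (measured position in metres, or None while the
# object is occluded; detector confidence), sampled every 0.1 s.
frames: List[Tuple[Optional[float], float]] = [
    (0.0, 0.9), (1.0, 0.9), (2.1, 0.8),
    (None, 0.0), (None, 0.0),  # occlusion: predict only
    (5.0, 0.3), (6.0, 0.9),
]
track = Track(pos=0.0, vel=0.0)
variances = []  # position variance P[0][0] after each frame
for z, conf in frames:
    track.predict(dt=0.1)
    if z is not None:
        track.update(z, base_var=0.05, confidence=conf)
    variances.append(track.P[0][0])
print([round(v, 3) for v in variances])
```

In this run the position variance rises over the two occluded frames and falls again when the low-confidence measurement is fused; because that reading's variance is inflated, it reduces the uncertainty less than a high-confidence reading would.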

Related products

VISIONAIRY™ Visual Inertial Odometry

VISIONAIRY™ Map-Based Relocalization

VISIONAIRY™ SLAM - EO | IR

VISIONAIRY™