visionairy®

freespace | collision prevention

Freespace provides fast, vision-based obstacle detection to enable real-time collision prevention for robots across air, land, and water—without the need for active sensors.

Why Freespace | Collision Prevention?

Collision avoidance is a critical requirement for autonomous systems operating in the air, on the ground, or at sea. Freespace offers a passive, vision-based solution that leverages high-speed depth estimation to detect obstacles in real time—without relying on costly or power-intensive active sensors like LiDAR.

Unlike traditional segmentation or object detection methods, Freespace operates deterministically, making it ideal for safety-critical applications. Its optimized algorithm works seamlessly with both monocular and stereo depth inputs, providing a fast, reliable, and scalable path to robust collision prevention across platforms.

Cross-Platform Ready

Lightweight & Efficient

No Manual Labeling Needed

See how it works

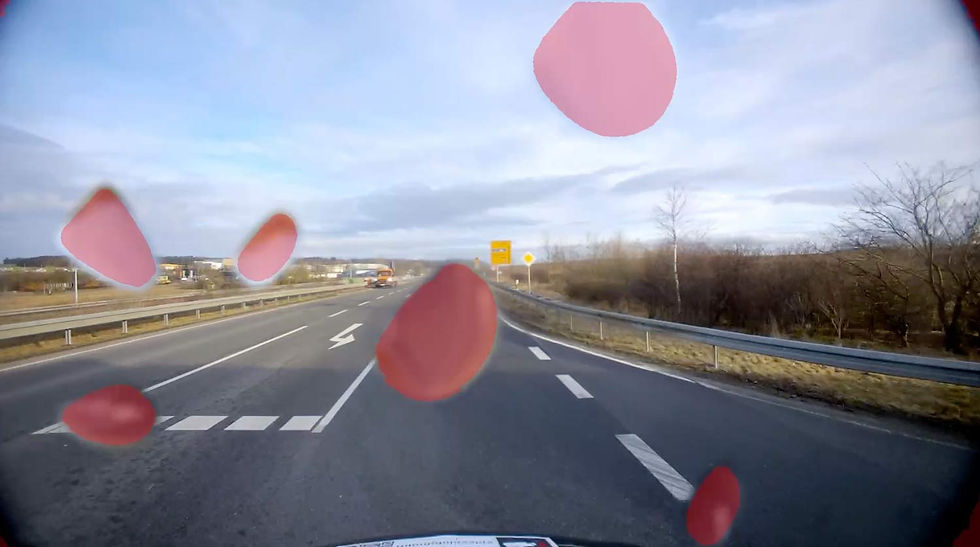

In this example, you can see that even detecting power lines is no problem for our VISIONAIRY® Collision Prevention.

Benefits

Passive Sensing

Relies exclusively on passive sensing for efficient and robust performance.

Enhanced Safety

Offers deterministic behavior, ideal for safety-relevant applications with minimal computing overhead

High Detection Accuracy

Achieves over 99% detection rate in street scenarios when combined with object detection.

Features

Freespace brings fast, reliable collision prevention to any robotic system by leveraging advanced, vision-based depth estimation.

Designed for flexibility, it operates with a wide range of camera configurations and hardware platforms—delivering safe, deterministic performance without the complexity of traditional perception stacks.

Real-Time Obstacle Detection - Rapid, frame-by-frame identification of navigable space using depth data.

Vision-Based & Passive - No need for active sensors — works purely from camera input, reducing cost and power usage.

Deterministic Behavior - Consistent, predictable performance ideal for high-safety applications.

Flexible Camera Compatibility - Can be used with any camera setup, including RGB and IR in mono or stereo configurations.

Hardware Acceleration Ready - Supports deployment on a wide range of embedded and edge devices, leveraging hardware acceleration (e.g., GPUs, NPUs).

No Semantic Labels Required - Eliminates the need for manual annotation or object classification—purely geometry-driven.

Scalable Across Platforms - Designed for use in aerial, ground, and maritime robotics systems.

These performance metrics are for demonstrative purposes only, based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

accuracy

±5% deviation depending on the used domain

update rate

Up to 100 Hz

initialization time

<10 seconds

Operating Range

Unlimited (environment-dependent)

Supported companion hardware

Nvidia Jetson, ModalAI Voxl2 / Mini, Qualcomm RB5, IMX7, IMX8, Raspberry PI

Basis-SW/OS

Linux, Docker required

Interfaces

ROS2

Input - Sensors

Any type of camera (sensor agnostic)

Input - Data

Dense depth estimation of the camera frame

Outputa - Data

Binary mask of unoccupied pixel that can be mapped to depth estimation

Minimum

Recommended

RAM

2 GB

4 GB

Storage

40 GB

60 GB

Camera

640 x 480 px, 20 FPS

1280 x 720 px, 60 FPS

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.