visionairy®

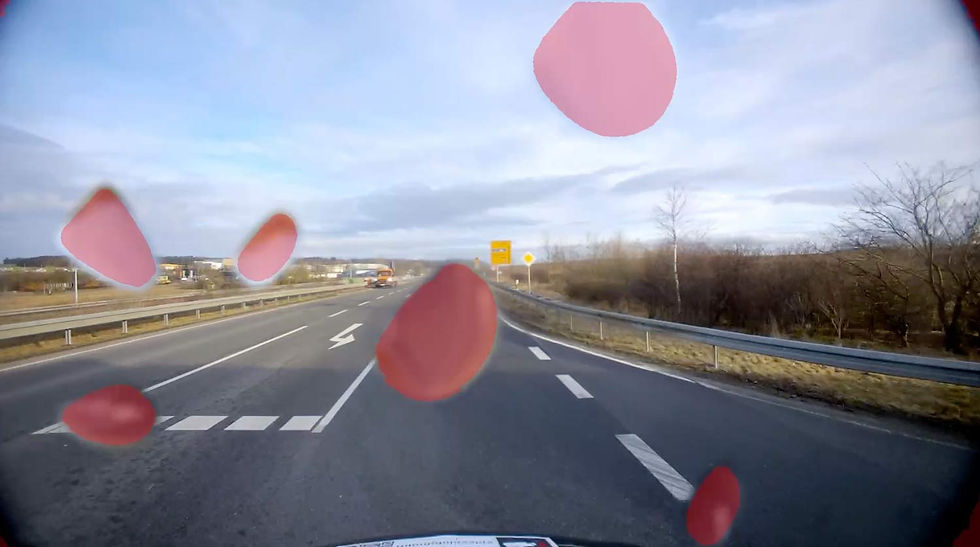

Camera Depth Estimation - IR | EO

Transform any camera into a precise depth sensor—eliminating the need for costly, sparse LiDAR systems.

Why Camera Depth Estimation?

Traditional depth sensors like LiDARs are often expensive, power-hungry, and limited in resolution.

Camera Depth Estimation offers a lightweight, scalable alternative by extracting metric depth directly from standard RGB images—making advanced 3D perception accessible across a wide range of devices and industries.

Cost-Effective

High Resolution - Generates dense, per-pixel depth maps from high-res imagery

Scalable & Versatile


Benefits

Low Power Consumption

Ideal for mobile and embedded systems where energy efficiency matters.

Improved Safety

Enables robust perception in scenarios where LiDAR may fail, such as reflective or transparent surfaces.

Seamless Integration

Easily added to existing camera pipelines without hardware changes.

Features

Our Camera Depth Estimation technology turns any standard RGB camera into a powerful depth-sensing device.

Built on advanced deep learning models, it delivers accurate, real-time depth perception without the need for specialized hardware. Explore the features that make our solution scalable, precise, and ready for real-world deployment:

Metric Depth Output - Provides real-world, scale-aware depth (in meters), not just relative disparities.

Dense Depth Maps - Generates per-pixel depth for the entire image, enabling fine-grained 3D understanding.

Monocular or Stereo Input - Works with a single RGB camera or a stereo pair for enhanced accuracy.

Real-Time Inference - Optimized for fast performance on GPUs or edge devices for real-time applications.

Robust to Lighting & Texture - Leverages learned features to estimate depth even in low-texture or variable lighting conditions.

Generalizable Models - Trained on diverse datasets to perform well across different scenes and camera types.
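To illustrate what a dense, metric depth map makes possible downstream, the sketch below back-projects per-pixel depth (in meters) into 3D points in the camera frame using a standard pinhole model. It is a minimal illustration and not the product's API: the `Intrinsics` class, the `backproject` function, and the intrinsic values are hypothetical, and the actual output format of the depth maps is not specified here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical values, for illustration)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


Point = Tuple[float, float, float]


def backproject(depth_m: List[List[float]], k: Intrinsics) -> List[Point]:
    """Convert a dense metric depth map (rows of depths in meters) into
    3D points (x, y, z) in the camera frame."""
    points: List[Point] = []
    for v, row in enumerate(depth_m):        # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if not z > 0.0:                  # skip invalid pixels (zero, negative, NaN)
                continue
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny 1 x 3 depth map; the middle pixel is marked invalid with 0.0.
    k = Intrinsics(fx=2.0, fy=2.0, cx=1.0, cy=0.0)
    print(backproject([[4.0, 0.0, 2.0]], k))
    # [(-2.0, 0.0, 4.0), (1.0, 0.0, 2.0)]
```

Because the depth is metric rather than relative, the resulting coordinates are in meters and need no additional scale recovery. With relative disparity alone, this step would require an external scale reference.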

These performance metrics are for illustrative purposes only and are based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

| Specification | Value |
| --- | --- |
| Update rate | 5 Hz |
| Initialization time | < 10 seconds |
| Operating range | 0-50 m, depending on the domain |
| Supported companion hardware | NVIDIA Jetson, ModalAI VOXL 2 / VOXL 2 Mini, Qualcomm RB5, IMX7, IMX8, Raspberry Pi |
| Base software / OS | Linux, Docker required |
| Interfaces | ROS2 |
| Input (sensors) | Any type of camera (sensor agnostic) |
| Input (data) | Camera video frames |
| Output (data) | Depth estimate for every pixel |

| Requirement | Minimum | Recommended |
| --- | --- | --- |
| RAM | 2 GB | 4 GB |
| Storage | 40 GB | 60 GB |
| Camera | 640 x 480 px, 20 FPS | 1280 x 720 px, 60 FPS |
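As a rough sizing aid, the figures above can be combined into a small budget calculation. The sketch below relates the stated 5 Hz update rate to the minimum and recommended camera settings. It rests on two assumptions that are not stated in this datasheet: that depth is computed on every n-th camera frame, and that each depth value is stored as a 32-bit float (4 bytes per pixel). The function names are hypothetical.

```python
import math


def frame_stride(camera_fps: float, update_hz: float) -> int:
    """Camera frames per depth update, assuming depth runs on every n-th frame."""
    if camera_fps <= 0 or update_hz <= 0:
        raise ValueError("rates must be positive")
    return max(1, math.floor(camera_fps / update_hz))


def latency_budget_ms(update_hz: float) -> float:
    """Time available per depth update, in milliseconds."""
    if update_hz <= 0:
        raise ValueError("update rate must be positive")
    return 1000.0 / update_hz


def depth_map_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one dense depth map; 4 bytes per pixel assumes float32 (an assumption)."""
    return width * height * bytes_per_pixel


if __name__ == "__main__":
    update_hz = 5.0  # update rate from the specification table
    for label, (w, h, fps) in {
        "minimum": (640, 480, 20.0),
        "recommended": (1280, 720, 60.0),
    }.items():
        size = depth_map_bytes(w, h)
        print(
            f"{label}: every {frame_stride(fps, update_hz)} frames, "
            f"{latency_budget_ms(update_hz):.0f} ms per update, "
            f"{size / 1e6:.4f} MB per depth map, "
            f"{size * update_hz / 1e6:.3f} MB/s"
        )
    # minimum: every 4 frames, 200 ms per update, 1.2288 MB per depth map, 6.144 MB/s
    # recommended: every 12 frames, 200 ms per update, 3.6864 MB per depth map, 18.432 MB/s
```

Under these assumptions, a 5 Hz update rate leaves 200 ms per depth map regardless of camera frame rate, and the output bandwidth stays modest even at the recommended resolution.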

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.