Base Software/OS

• Linux; a Dockerized deployment is possible

• GPU acceleration supported

Interfaces

ROS1, ROS2

Input - Sensors

• Multi-camera; single camera also possible (sensor-agnostic)

• Lidar: AEVA, Ouster

• Radar

Input - Data

• Recordings longer than 15 seconds for each sensor

• Intrinsic & Extrinsic sensor calibrations
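
The input requirements above can be checked before a dataset is handed over. The following is a minimal sketch, not part of the product: the `SensorRecording` class, its field names, and the `check_inputs` helper are hypothetical. Only the 15-second minimum and the need for intrinsic and extrinsic calibrations come from the list above.

```python
from dataclasses import dataclass

# From the requirement above: recordings must be longer than 15 seconds.
MIN_DURATION_S = 15.0


@dataclass
class SensorRecording:
    """Hypothetical description of one sensor's recording (all names are assumptions)."""
    name: str
    duration_s: float
    has_intrinsics: bool
    has_extrinsics: bool


def check_inputs(recordings):
    """Return a list of readable problems; an empty list means the inputs look complete."""
    problems = []
    for rec in recordings:
        if rec.duration_s <= MIN_DURATION_S:
            problems.append(
                f"{rec.name}: recording is {rec.duration_s:.1f} s, "
                f"need more than {MIN_DURATION_S:.0f} s"
            )
        if not rec.has_intrinsics:
            problems.append(f"{rec.name}: missing intrinsic calibration")
        if not rec.has_extrinsics:
            problems.append(f"{rec.name}: missing extrinsic calibration")
    return problems
```

Calling `check_inputs` with one `SensorRecording` per camera, lidar, and radar gives a quick completeness report before the data is sent for evaluation.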

Output - Data

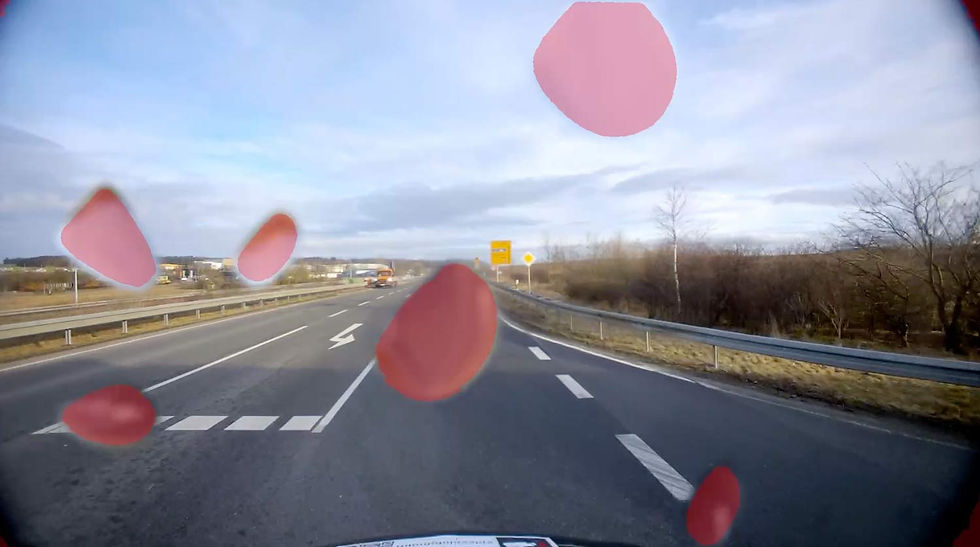

• Camera: Opaque Blockage (pixelwise mask) and Soiling Blockage

• Lidar: Scattering Blockage and Reflectivity Blockage

• Radar: Sparsity Blockage and Velocity Blockage
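
To make the output list concrete, the sketch below models the six blockage outputs as an enumeration and reduces a pixelwise camera mask to a blocked-area ratio. The enum, the mask layout (rows of 0/1 values), and the function name are illustrative assumptions; the actual output format is not specified here.

```python
from enum import Enum


class BlockageType(Enum):
    # One entry per output listed above, grouped by sensor modality.
    CAMERA_OPAQUE = "camera/opaque"
    CAMERA_SOILING = "camera/soiling"
    LIDAR_SCATTERING = "lidar/scattering"
    LIDAR_REFLECTIVITY = "lidar/reflectivity"
    RADAR_SPARSITY = "radar/sparsity"
    RADAR_VELOCITY = "radar/velocity"


def blocked_ratio(mask):
    """Fraction of pixels marked as blocked in a pixelwise mask (rows of 0/1 values)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        return 0.0  # an empty mask carries no blockage evidence
    blocked = sum(1 for row in mask for px in row if px)
    return blocked / total
```

A downstream system could compare such a ratio against its own threshold to decide between requesting sensor cleaning and halting operation; the threshold itself would depend on the application.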

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are adaptable to any chip, platform, sensor, and individual configuration. Please contact us for further information.

visionairy®

Multi-Sensor Blockage Detection

Identifies and alerts when outdoor sensors become obstructed, helping prevent perception failures in autonomous vehicles by prompting sensor cleaning or halting operations in time.

Benefits

Multi-Sensor Support

Seamlessly integrates Camera, Radar, and LiDAR data for reliable blockage detection across all conditions.

All-condition performance

Supports robust blockage detection day and night using diverse sensor types.

Generalizable detection

Detects previously unseen blockages by analyzing visible degradations in the surrounding environment over time and across multiple sensor perspectives.

Features

VISIONAIRY® integrates data from multiple sensor modalities to ensure reliable operation in all environmental conditions:

Multi-Sensor Integration - Leverages sensor redundancies across Cameras, Radars, and LiDARs to accurately detect blockages under all lighting and environmental conditions.

Computer Vision at Its Core - Rather than relying on costly model fine-tuning to detect your specific blockages, our system instantly identifies anomalies across sensors with modern Computer Vision approaches.

Sensor-Focused Detection - Differentiates true sensor blockages from environmental changes by comparing overlapping sensor views.

Efficient C++ Implementation - Offers adjustable update rates to balance performance with your computational budget.

Detailed Blockage Output - Provides rich data, including pixel-level blockage masks for precise analysis.
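
The idea behind Sensor-Focused Detection can be illustrated with a toy example. This is a simplified sketch and not the product's algorithm: it assumes two sensors whose overlapping field of view has already been aligned to the same cells, and a hypothetical per-cell "detail" score between 0 and 1 (for example, local contrast). If only one sensor loses detail in a cell, that sensor is likely obstructed; if both do, the scene itself has probably changed.

```python
def classify_overlap(detail_a, detail_b, threshold=0.3):
    """Label each shared cell by comparing the detail scores of two sensors.

    Labels:
      "blocked_a"   - only sensor A lost detail, so A is likely obstructed
      "blocked_b"   - only sensor B lost detail, so B is likely obstructed
      "environment" - both lost detail, so the scene itself likely changed
      "clear"       - neither sensor lost detail
    The threshold value is an arbitrary assumption for this sketch.
    """
    if len(detail_a) != len(detail_b):
        raise ValueError("both sensors must cover the same cells")
    labels = []
    for a, b in zip(detail_a, detail_b):
        a_degraded = a < threshold
        b_degraded = b < threshold
        if a_degraded and b_degraded:
            labels.append("environment")
        elif a_degraded:
            labels.append("blocked_a")
        elif b_degraded:
            labels.append("blocked_b")
        else:
            labels.append("clear")
    return labels
```

For instance, `classify_overlap([0.9, 0.1], [0.8, 0.9])` labels the first cell `"clear"` and the second `"blocked_a"`, because only sensor A lost detail there.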

Why Blockage Detection?

Sensors on autonomous systems are exposed to harsh outdoor environments, making them prone to blockages from dust, rain, mud, or insects. These obstructions can degrade perception algorithms and compromise safety. Spleenlab's Multi-Sensor Blockage Detection identifies such issues early, enabling timely cleaning or safe shutdowns to prevent failures.

Our system scales from single-sensor setups to large arrays of Cameras, Lidars, and Radars. By leveraging redundancy across sensors and over time, we detect various blockage types—from small opaque spots to broad translucent soiling—ensuring robust and reliable operation in real-world conditions.
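
Redundancy over time can be pictured with a simple heuristic: while the platform moves, the scene changes from frame to frame, but a spot stuck on the lens does not. The sketch below flags pixels whose intensity barely varies across frames. It is an illustrative assumption rather than the method used in the product, and the function name, frame layout (2D lists of intensities in [0, 1]), and variance threshold are all hypothetical.

```python
from statistics import pvariance


def static_pixel_mask(frames, max_variance=1e-3):
    """Flag pixels whose intensity barely changes across frames.

    frames: list of equally sized 2D lists of intensities in [0, 1],
            recorded while the platform is moving (an assumption of this sketch).
    Returns a 2D list of 0/1 values, where 1 marks a suspiciously static pixel.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames to measure change over time")
    rows, cols = len(frames[0]), len(frames[0][0])
    mask = []
    for r in range(rows):
        mask_row = []
        for c in range(cols):
            # Intensity history of this pixel across all frames.
            series = [frame[r][c] for frame in frames]
            mask_row.append(1 if pvariance(series) <= max_variance else 0)
        mask.append(mask_row)
    return mask
```

A heuristic like this would also flag genuinely static content, such as the vehicle's own hood in the image, which is one reason cross-checking against other sensors is useful.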

• Prevents perception failures by detecting sensor blockages before they impact safety

• Adapts to any setup, from single sensors to complex multi-sensor arrays

• Detects all types of blockages using smart fusion of sensor data over time