VISIONAIRY®

Multi Object Detection & Tracking - IR | EO

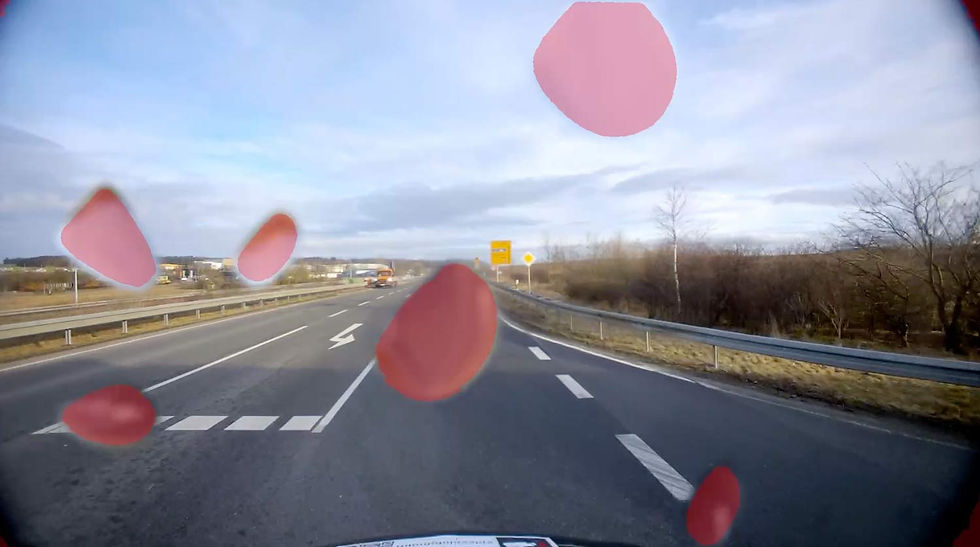

Multi Object Detection & Tracking - IR | EO delivers reliable detection and tracking across infrared (IR) and electro-optical (EO) sensors, enabling consistent situational awareness in robotics even under changing environmental conditions.

Why Multi Object Detection & Tracking - IR | EO?

In complex, dynamic environments, tracking a single object is often not enough: many real-world applications require monitoring multiple targets simultaneously. Multi Object Detection & Tracking - IR | EO (MOT) enables systems to detect and follow several objects across frames while maintaining a unique identity for each one over time.

This is essential for applications like traffic analysis, crowd monitoring, autonomous navigation, and retail analytics, where situational awareness depends on understanding the movement and interaction of many entities.

Our MOT solutions combine state-of-the-art detection algorithms with robust tracking models, maintaining high accuracy even in crowded scenes, under occlusion, or in variable lighting. With support for a wide variety of sensors and deployment platforms, our technology delivers scalable, real-time multi-object tracking that adapts to your specific environment and use case.

Track Multiple Targets Simultaneously

Robust in Real-World Conditions

Flexible & Scalable Deployment


Benefits

Lightweight & Efficient

Runs smoothly on small, resource-constrained devices—ideal for edge and embedded applications.

Multimodal Support

Compatible with various sensor types, including RGB, thermal, infrared, and more.

Real-Time Performance

Delivers low-latency, high-speed tracking for time-critical use cases.

Features

VISIONAIRY® Multi Object Detection & Tracking (MOT) combines several core components—each playing a vital role in ensuring accuracy, identity consistency, and real-time performance:

Object Detection Module - Identifies and localizes all relevant objects in each frame using bounding boxes and class labels.

Feature Extraction Network - Extracts visual and/or contextual features from detected objects to aid in identity matching across frames.

Data Association Algorithm - Matches detections to existing tracks using cost metrics such as IoU (intersection over union), appearance similarity, or learned embeddings, solved with methods such as the Hungarian algorithm, Deep SORT, or tracking-by-attention.

Tracking Module / Track Management - Maintains object identities over time, initiates new tracks, updates existing ones, and handles object disappearance (e.g., due to occlusion or leaving the scene).

Multiclass and Multimodal Support - Enables simultaneous tracking of different object classes and fuses input from multiple sensor types (e.g., RGB + LiDAR, infrared).

Real-Time Processing Engine - Ensures low-latency detection and tracking suitable for real-time applications like autonomous driving or video surveillance.

Output Interface / API - Provides standardized tracking outputs (e.g., bounding boxes, track IDs, class labels) for downstream applications via APIs or messaging protocols.
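To make the data association and track management steps more concrete, here is a minimal Python sketch of a generic tracking-by-detection loop. It is an illustration under stated assumptions, not the VISIONAIRY® implementation: boxes are assumed to be `(x1, y1, x2, y2)` pixel tuples, association uses IoU only (no appearance features), and a greedy highest-IoU-first matcher stands in for the Hungarian algorithm. The names `IouTracker`, `iou_threshold`, and `max_misses` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    label: str
    misses: int = 0  # consecutive frames without a matched detection


class IouTracker:
    """Hypothetical minimal tracker for illustration, not the VISIONAIRY® implementation."""

    def __init__(self, iou_threshold: float = 0.3, max_misses: int = 5) -> None:
        self.iou_threshold = iou_threshold
        self.max_misses = max_misses
        self.tracks: List[Track] = []
        self._next_id = 1

    def update(self, detections: List[Tuple[Box, str]]) -> List[Track]:
        # Data association: score every (track, detection) pair of the same class by IoU.
        pairs = []
        for ti, trk in enumerate(self.tracks):
            for di, (box, label) in enumerate(detections):
                if label == trk.label:
                    score = iou(trk.box, box)
                    if score >= self.iou_threshold:
                        pairs.append((score, ti, di))
        # Greedy matching, highest IoU first (a simpler stand-in for the Hungarian algorithm).
        pairs.sort(reverse=True)
        matched_t, matched_d = set(), set()
        for _, ti, di in pairs:
            if ti in matched_t or di in matched_d:
                continue
            matched_t.add(ti)
            matched_d.add(di)
            self.tracks[ti].box = detections[di][0]
            self.tracks[ti].misses = 0
        # Track management: unmatched tracks age and are dropped after max_misses frames
        # (e.g., the object left the scene or stayed occluded too long).
        for ti, trk in enumerate(self.tracks):
            if ti not in matched_t:
                trk.misses += 1
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        # Unmatched detections start new tracks with fresh IDs.
        for di, (box, label) in enumerate(detections):
            if di not in matched_d:
                self.tracks.append(Track(self._next_id, box, label))
                self._next_id += 1
        # Output: only tracks observed in the current frame (box, track ID, class label).
        return [t for t in self.tracks if t.misses == 0]


if __name__ == "__main__":
    tracker = IouTracker()
    tracker.update([((10, 10, 50, 50), "person"), ((100, 100, 140, 160), "vehicle")])
    frame2 = tracker.update([((12, 11, 52, 51), "person"), ((103, 102, 143, 162), "vehicle")])
    print([(t.track_id, t.label) for t in frame2])  # [(1, 'person'), (2, 'vehicle')]
```

Replacing the greedy loop with an optimal solver (for example SciPy's `linear_sum_assignment` on a `1 - IoU` cost matrix) and adding appearance embeddings to the cost would move this sketch toward Deep SORT-style association.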

These performance metrics are for illustrative purposes only and are based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor; contact us for details.

| Specification | Value |
|---|---|
| Position accuracy | ±2.5 cm in typical environments |
| Update rate | Up to 200 Hz |
| Initialization time | < 10 seconds |
| Operating range | Unlimited (environment-dependent) |
| Supported companion hardware | NVIDIA Jetson, ModalAI VOXL 2 / VOXL 2 Mini, Qualcomm RB5, i.MX 7, i.MX 8, Raspberry Pi |
| Supported flight controllers | PX4, APX4, ArduPilot |
| Base software / OS | Linux; Docker required |
| Interfaces | ROS 2 or MAVLink |
| Input: sensors | Any sensor type (sensor-agnostic) |
| Input: data | Camera video frames; intrinsic and extrinsic sensor calibration (optional) |
| Output | (1) Object position and consistent track ID; (2) segmentation mask |

| Requirement | Minimum | Recommended |
|---|---|---|
| RAM | 2 GB | 4 GB |
| Storage | 20 GB | 50 GB |
| Camera | 320 × 240 px, 10 FPS | 1920 × 1080 px, 30 FPS |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.
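For orientation, the rates listed above translate into per-frame time budgets via the period $t = 1/f$. This is plain arithmetic on the stated figures, not a measured latency:

$$
t = \frac{1}{f}:\qquad
200\ \text{Hz} \;\Rightarrow\; 5\ \text{ms},\qquad
30\ \text{FPS} \;\Rightarrow\; \approx 33.3\ \text{ms},\qquad
10\ \text{FPS} \;\Rightarrow\; 100\ \text{ms}
$$

Assuming frames are processed one at a time, the detection-and-tracking pipeline must therefore finish within roughly 33 ms per frame to keep pace with the recommended 30 FPS camera input.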

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.