visionairy®

Follow Me Guidance

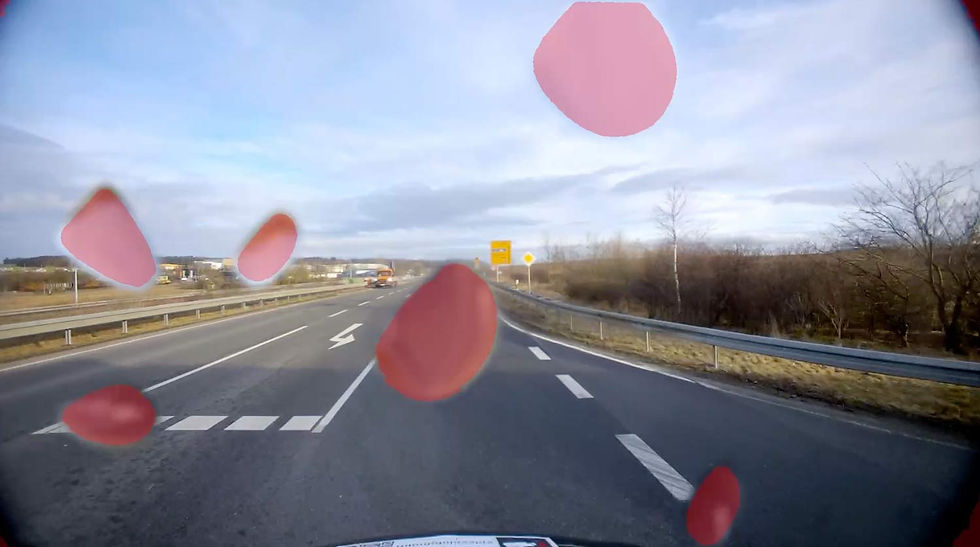

Enables drones to autonomously and intelligently track and follow moving targets in real time, ensuring smooth pursuit even in complex, changing environments.

Why Follow Me Guidance?

Follow Me Guidance gives drones the power to autonomously track people, vehicles, or objects using real-time vision—no GPS or manual control required. It outperforms traditional tracking by adapting instantly to dynamic environments, ensuring smooth, reliable pursuit in even the most challenging conditions.

With onboard vision and intelligent path adjustment, the system handles terrain changes and obstacles with ease. The result: smarter, safer, and more hands-free tracking for demanding applications like filming, inspection, or rescue.

Autonomous visual tracking

Real-time adaptation

Reliable pursuit in complex, dynamic environments


These performance metrics are for demonstrative purposes only, based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

| Metric | Value |
| --- | --- |
| Update Rate | 5-10 Hz |
| Initialization Time | <10 seconds |
| Maximum Target Speed | 30 km/h |
| Operating Range | Line-of-sight or sensor-limited |
| Latency | <100 ms |
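To put these figures in perspective, the short calculation below estimates how far a target moving at the maximum listed speed travels between two updates and during the latency window. It is only back-of-the-envelope arithmetic on the figures above; the function name `distance_travelled_m` is illustrative and not part of the product.

```python
def distance_travelled_m(speed_kmh: float, seconds: float) -> float:
    """Distance in metres covered at a constant speed over a time span."""
    return speed_kmh / 3.6 * seconds


MAX_TARGET_SPEED_KMH = 30.0    # maximum target speed listed above
UPDATE_RATES_HZ = (5.0, 10.0)  # slowest and fastest listed update rate
LATENCY_S = 0.1                # upper bound of the listed latency

for rate_hz in UPDATE_RATES_HZ:
    gap = distance_travelled_m(MAX_TARGET_SPEED_KMH, 1.0 / rate_hz)
    print(f"{rate_hz:.0f} Hz: target moves {gap:.2f} m between updates")

lag = distance_travelled_m(MAX_TARGET_SPEED_KMH, LATENCY_S)
print(f"100 ms latency: target moves {lag:.2f} m")
```

At 30 km/h the target covers roughly 1.7 m between updates at 5 Hz and about 0.8 m within 100 ms, which is the kind of gap the guidance has to bridge between measurements.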

| Item | Details |
| --- | --- |
| Supported companion hardware | NVIDIA Jetson, ModalAI VOXL 2 / VOXL 2 Mini, Qualcomm RB5, i.MX 7, i.MX 8, Raspberry Pi |
| Supported flight controllers | PX4, APX4, ArduPilot |
| Base software / OS | Linux, Docker required |
| Interfaces | ROS 2 or MAVLink |

Input - Sensors

• Any type of camera (sensor agnostic)

• Any type of IMU or GPS

Input - Data

• Camera video frames

• Aerial vehicle’s odometry

• Aerial vehicle’s current flight height

• Intrinsic & extrinsic sensor calibration
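As a rough illustration of why these inputs matter, the sketch below combines a target's pixel position, the camera intrinsics, and the flight height to estimate the target's horizontal offset from the drone. It assumes an ideal pinhole camera pointing straight down over flat ground, with no lens distortion. This is not the product's algorithm, and the names `Intrinsics` and `pixel_to_ground_offset` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics, all values in pixels (hypothetical type)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate


def pixel_to_ground_offset(
    u: float, v: float, height_m: float, k: Intrinsics
) -> Tuple[float, float]:
    """Project pixel (u, v) onto flat ground below a downward-facing camera.

    Returns the (x, y) offset in metres, expressed in the camera frame.
    """
    if height_m <= 0.0:
        raise ValueError("flight height must be positive")
    x = (u - k.cx) / k.fx * height_m
    y = (v - k.cy) / k.fy * height_m
    return x, y


# Example: 640 x 480 image, target seen 100 px right of the image centre,
# drone flying 10 m above the ground.
camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(pixel_to_ground_offset(420.0, 240.0, 10.0, camera))  # (2.0, 0.0)
```

The offset is expressed in the camera frame; in this simplified picture, the extrinsic calibration is what would rotate and translate it into the vehicle frame before it is combined with odometry.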

Output - Data

• Navigation of the aerial vehicle

• Position commands for the autopilot

• Velocity and orientation commands for the autopilot

| Component | Minimum | Recommended |
| --- | --- | --- |
| RAM | 2 GB | 4 GB |
| Storage | 20 GB | 50 GB |
| Camera | 640 x 480 px, 10 FPS | 1920 x 1080 px, 30 FPS |
| IMU | 100 Hz or GPS | 300 Hz or GPS |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.

Performance Metrics

| Metric | Value |
| --- | --- |
| Position Accuracy | ±2.5 cm in typical environments |
| Update Rate | Up to 200 Hz |
| Initialization Time | <1 second |
| Maximum Velocity | 20 m/s with full accuracy |
| Operating Range | Unlimited (environment-dependent) |
| Drift | <0.1% of distance traveled |

Benefits

Lightweight and Efficient

Runs on compact, low-power hardware without compromising performance

Robust Tracking

Maintains reliable target lock even during partial occlusions

Seamless Integration

Compatible with standard autopilot systems for easy deployment

Features

Follow Me Guidance combines onboard visual tracking with adaptive path and speed control to follow a designated target in real time:

Visual Detection & Tracking - Uses camera input to continuously detect and follow a designated target in real time.

Target Reacquisition - Recovers lock within seconds after occlusions or temporary loss of visual contact.

Dynamic Pathing - Adapts flight path based on target movement and relative position to maintain smooth pursuit.

Camera-Agnostic - Works with any monocular camera—no need for specialized hardware.

Seamless Autopilot Integration - Works out-of-the-box with common flight control systems.

Adaptive Speed and Distance Control - Adjusts movement based on the target's speed and distance to ensure smooth and responsive guidance.

Fail-Safe Behavior - Defines safe behavior when tracking is lost, such as stopping, returning to base, or switching to manual control.
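The control-related features above can be pictured with a small sketch. It is a simplified, one-dimensional illustration and not the product's implementation: a proportional controller on the distance error with the target's speed as feed-forward, a speed clamp, and a timeout that switches from hovering to a return-to-base action once the target has been lost for too long. All names, gains, and thresholds (`FollowConfig`, `follow_step`, `kp`, `lost_timeout_s`) are assumptions chosen for illustration; the speed clamp simply borrows the 30 km/h maximum target speed listed earlier.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Action(Enum):
    FOLLOW = "follow"
    HOVER = "hover"                    # hold position and wait for reacquisition
    RETURN_TO_BASE = "return_to_base"  # fail-safe once the grace period expires


@dataclass(frozen=True)
class FollowConfig:
    desired_distance_m: float = 8.0    # hypothetical stand-off distance
    kp: float = 0.5                    # hypothetical proportional gain (1/s)
    max_speed_mps: float = 30.0 / 3.6  # clamp borrowed from the listed 30 km/h
    lost_timeout_s: float = 5.0        # hypothetical reacquisition grace period


def follow_step(
    distance_m: Optional[float],
    target_speed_mps: float,
    seconds_since_seen: float,
    cfg: FollowConfig = FollowConfig(),
) -> Tuple[Action, float]:
    """Return (action, forward speed command in m/s) for one control cycle.

    distance_m is None while the target is not visible.
    """
    if distance_m is None:
        if seconds_since_seen < cfg.lost_timeout_s:
            return Action.HOVER, 0.0
        return Action.RETURN_TO_BASE, 0.0

    # Feed-forward on the target's speed plus a correction that closes the gap.
    error = distance_m - cfg.desired_distance_m
    command = target_speed_mps + cfg.kp * error
    command = max(-cfg.max_speed_mps, min(cfg.max_speed_mps, command))
    return Action.FOLLOW, command


print(follow_step(12.0, 3.0, 0.0))  # target 4 m too far away: speed up
print(follow_step(None, 0.0, 2.0))  # briefly lost: hover and wait
print(follow_step(None, 0.0, 6.0))  # lost beyond the grace period: fail-safe
```

Separating the action from the speed command keeps the fail-safe decision explicit, so a different policy (for example handing control back to the pilot) could replace `RETURN_TO_BASE` without touching the follow logic.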