visionairy®

Auto Multi Modal Sensor Calibration

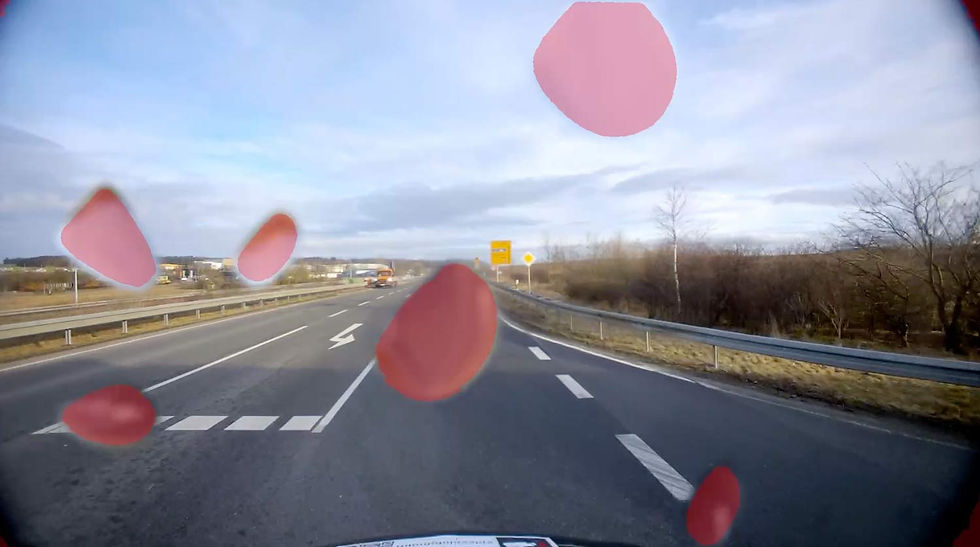

Enables real-time, targetless calibration between sensors of different modalities for accurate, automated sensor fusion in dynamic environments.

Why Auto Multi Modal Sensor Calibration?

Calibrating sensors of different modalities against each other is one of the hardest calibration problems in the field. Our algorithm uses neural networks to transform the camera image into a geometric representation that can be aligned with the lidar data as if both came from the same modality.

This lets the camera use the lidar as its calibration target automatically, eliminating the need for engineered targets or calibration rigs.

Boosts fusion accuracy, reduces maintenance downtime

Enhances safety with continuous recalibration

Neural networks solve cross-sensor calibration

Benefits

Target-free

Eliminates the hassle and limitations of traditional calibration setups.

Accurate & Scalable

Ideal for large-scale or dynamic deployments.

Flexible performance

Runs efficiently on both GPU and CPU platforms for easy integration.

Features

The following features highlight the core capabilities and advantages of our auto multi modal sensor calibration solution, designed for seamless integration, high accuracy, and robust performance across environments:

Automatic, Target-Free Calibration - Calibrates extrinsics between several different sensor types without requiring calibration patterns or manual setup.

Online Operation in Any Environment - Performs continuous or on-demand calibration during runtime, adapting to diverse indoor and outdoor conditions.

Universal Sensor Compatibility - Works with any LiDAR-camera pair whose fields of view overlap, regardless of sensor model or differences in field of view.

No Network Retraining Required - Deploys out-of-the-box without the need for dataset-specific neural network training or tuning.

These performance metrics are for demonstrative purposes only, based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

| Metric | Value |
|---|---|
| Position Accuracy | <0.1 degree |
| Update Rate | 5 Hz |
| Initialization Time | 10 seconds |
| Average Position Drift | 2% of distance traveled |
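
The accuracy figure above is given in degrees. As a rough aid for interpreting it, the sketch below converts a residual rotation error into the lateral offset it causes at a given range and into the approximate pixel shift near the image center. It assumes the figure is read as an angular error of the camera-lidar rotation and that the camera follows a pinhole model. The function names, the 50 m range, and the 1000 px focal length are illustrative assumptions, not product parameters.

```python
import math


def lateral_offset_m(angle_deg: float, range_m: float) -> float:
    """Lateral displacement of a point at range_m caused by a rotation error."""
    return range_m * math.tan(math.radians(angle_deg))


def pixel_shift_px(angle_deg: float, focal_length_px: float) -> float:
    """Approximate image shift near the principal point of a pinhole camera."""
    return focal_length_px * math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    # Hypothetical values: 0.1 degree error, 50 m range, 1000 px focal length.
    print(f"{lateral_offset_m(0.1, 50.0):.3f} m")
    print(f"{pixel_shift_px(0.1, 1000.0):.2f} px")
```

Under these assumptions, a 0.1 degree error corresponds to roughly 9 cm of misalignment at 50 m, or a shift of a little under 2 px in the image.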

Supported companion hardware

NVIDIA Jetson, personal computer, cloud (AWS etc.)

Base software / OS

Linux, Docker required

Interfaces

ROS 2 or compiled C++ library

Input - Sensors

• Any type of camera (sensor agnostic)

• Any type of 3D LiDAR

Input - Data

• Camera image frames (timestamped)

• LiDAR point clouds (timestamped)

• Intrinsic camera calibration

• Initial estimate of camera-lidar extrinsic calibration
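Because both input streams are timestamped and typically arrive at different rates, an integration may want to pair each camera frame with the closest lidar sweep before passing the data on. The sketch below shows one simple way to do that with nearest-timestamp matching. Whether the product performs its own synchronization is not stated here, so treat this as an assumed preprocessing step. The function name `pair_by_timestamp` and the `max_gap_s` tolerance are hypothetical.

```python
from bisect import bisect_left


def pair_by_timestamp(camera_stamps, lidar_stamps, max_gap_s=0.05):
    """Pair each camera stamp with the nearest lidar stamp within max_gap_s.

    Both lists hold timestamps in seconds and must be sorted ascending.
    Returns a list of (camera_stamp, lidar_stamp) tuples.
    """
    pairs = []
    for cam_t in camera_stamps:
        i = bisect_left(lidar_stamps, cam_t)
        # Candidates: the lidar stamps just before and just after cam_t.
        candidates = lidar_stamps[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda t: abs(t - cam_t))
        if abs(nearest - cam_t) <= max_gap_s:
            pairs.append((cam_t, nearest))
    return pairs


if __name__ == "__main__":
    # Hypothetical streams: camera at about 30 FPS, lidar at 10 Hz.
    camera = [0.000, 0.033, 0.067, 0.100]
    lidar = [0.0, 0.1]
    print(pair_by_timestamp(camera, lidar, max_gap_s=0.02))
```

Camera frames without a lidar sweep inside the tolerance are simply dropped, which keeps the pairing conservative at the cost of using fewer frames.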

Output - Data

Optimized extrinsic calibration between the camera and lidar sensors - rotation only
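To illustrate how the inputs and the output listed above fit together, the sketch below projects a lidar point into the image using a pinhole camera model. It assumes that the optimized rotation replaces the rotation of the initial extrinsic estimate while the translation is kept from that estimate, since the output is rotation only. All names (`rot_z`, `mat_vec`, `project`) and all numeric values are hypothetical and are not part of the product's ROS 2 or C++ interface.

```python
import math


def rot_z(deg):
    """3x3 rotation about the z axis, as nested lists."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def project(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project a lidar point into pixel coordinates with a pinhole model.

    rotation and translation map lidar coordinates into the camera frame
    (camera looking along +z). Returns None for points behind the camera.
    """
    rotated = mat_vec(rotation, point_lidar)
    x, y, z = (r + t for r, t in zip(rotated, translation))
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    point = [1.0, 0.0, 10.0]        # hypothetical lidar point
    translation = [0.0, 0.0, 0.0]   # kept from the initial estimate
    intrinsics = dict(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)

    initial = project(point, rot_z(0.0), translation, **intrinsics)
    optimized = project(point, rot_z(0.5), translation, **intrinsics)
    print("initial rotation:  ", initial)
    print("optimized rotation:", optimized)
```

Comparing the two projections shows how a small change in rotation alone moves lidar points in the image, which is the misalignment that the calibration output is meant to remove.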

| | Minimum | Recommended |
|---|---|---|
| RAM | ? GB | 4 GB |
| Storage | ? GB | 50 GB |
| Camera | 640 x 480 px, 10 FPS | 1920 x 1080 px, 30 FPS |
| LiDAR | - | 3D, 10 Hz |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.

Performance Metrics

| Metric | Value |
|---|---|
| Position accuracy | ±2.5 cm in typical environments |
| Update rate | Up to 200 Hz |
| Initialization time | <1 second |
| Maximum velocity | 20 m/s with full accuracy |
| Operating range | Unlimited (environment-dependent) |
| Drift | <0.1% of distance traveled |