
visionairy®

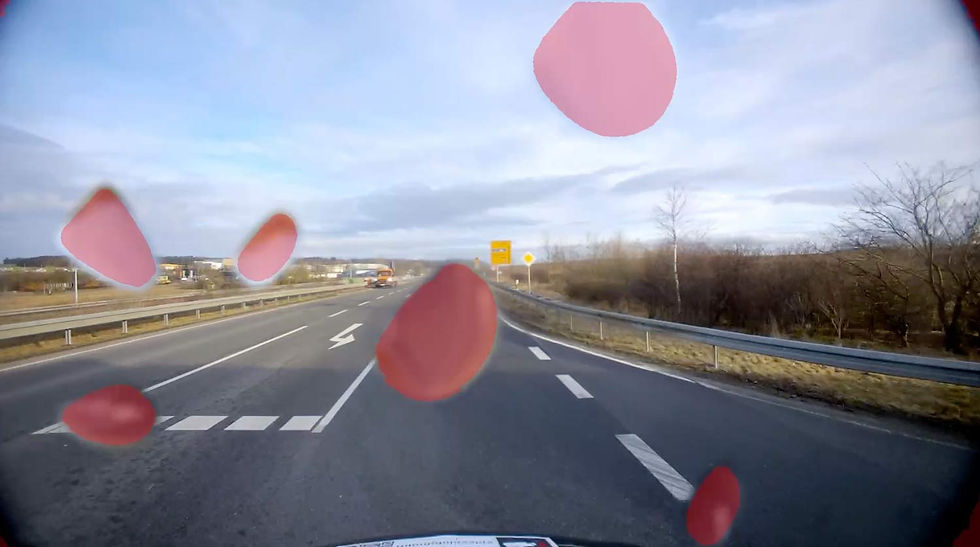

Single Object Tracking - IR | EO

Single Object Tracking - IR | EO tracks one selected target in imagery from both infrared (IR) and electro-optical (EO) sensors. It provides reliable target selection, tracking, and situational awareness, which is particularly important in robotics applications where environmental conditions may vary significantly.

These performance metrics are for demonstrative purposes only, based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

Update rate

Up to 200 Hz

Initialization time

<10 seconds

Operating Range

Unlimited (environment-dependent)

Supported companion hardware

NVIDIA Jetson, ModalAI VOXL 2 / Mini, Qualcomm RB5, i.MX 7, i.MX 8, Raspberry Pi

Supported flight controllers

PX4, APX4, ArduPilot

Base software / OS

Linux, Docker required

Interfaces

ROS2

Input

Sensors: Any type of camera (sensor agnostic)

Data: Camera video frames, intrinsic and extrinsic sensor calibration (optional)

Output

Object position and consistent Track-ID
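The input and output described above can be pictured as plain data records. The sketch below is illustrative only: the class names, field names, and the choice of pixel coordinates for the position are assumptions made for this example, not the product's ROS2 message definitions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class CameraCalibration:
    """Hypothetical container for the optional calibration data."""
    intrinsics: Tuple[float, ...]                    # 3x3 matrix, row-major (9 values)
    extrinsics: Optional[Tuple[float, ...]] = None   # 4x4 matrix, row-major (16 values)


@dataclass(frozen=True)
class TrackerInput:
    """One camera frame handed to the tracker (hypothetical layout)."""
    timestamp_s: float
    width_px: int
    height_px: int
    pixels: bytes                                    # raw frame data from any camera type
    calibration: Optional[CameraCalibration] = None  # calibration is optional


@dataclass(frozen=True)
class TrackerOutput:
    """One tracking result: object position plus a Track-ID."""
    timestamp_s: float
    track_id: int
    position_px: Tuple[float, float]                 # assumed here: (x, y) in image pixels


def track_id_is_consistent(outputs: Sequence[TrackerOutput]) -> bool:
    """True if every result in the sequence carries the same Track-ID."""
    return len({out.track_id for out in outputs}) <= 1


# Usage: three results for the same target share one Track-ID.
results = [TrackerOutput(t * 0.005, 7, (100.0 + t, 50.0)) for t in range(3)]
print(track_id_is_consistent(results))
```

Keeping the calibration optional in the record mirrors the input description: the tracker needs frames, while calibration data is supplied only when available.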

| | Minimum | Recommended |
| --- | --- | --- |
| RAM | 2 GB | 4 GB |
| Storage | 10 GB | 50 GB |
| Camera | 320 x 240 px, 10 FPS | 1920 x 1080 px, 30 FPS |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.
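As a rough aid, the minimum and recommended figures above can be encoded and compared against a candidate setup. This is a minimal sketch: `HardwareSpec`, `shortfalls`, and `classify` are hypothetical helpers written for this example, and since the recommendations are not hard limitations, the result is only a first indication.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class HardwareSpec:
    ram_gb: float
    storage_gb: float
    cam_width_px: int
    cam_height_px: int
    cam_fps: float


# Values taken from the minimum / recommended figures in this datasheet.
MINIMUM = HardwareSpec(2, 10, 320, 240, 10)
RECOMMENDED = HardwareSpec(4, 50, 1920, 1080, 30)


def shortfalls(actual: HardwareSpec, target: HardwareSpec) -> List[str]:
    """Return the names of all fields where `actual` is below `target`."""
    return [
        f.name
        for f in fields(target)
        if getattr(actual, f.name) < getattr(target, f.name)
    ]


def classify(actual: HardwareSpec) -> str:
    """Label a setup as 'below minimum', 'minimum', or 'recommended'."""
    if shortfalls(actual, MINIMUM):
        return "below minimum"
    if shortfalls(actual, RECOMMENDED):
        return "minimum"
    return "recommended"


# Usage: a board with 4 GB RAM, 32 GB storage, and a 720p / 30 FPS camera.
candidate = HardwareSpec(4, 32, 1280, 720, 30)
print(classify(candidate), shortfalls(candidate, RECOMMENDED))
```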

Performance Metrics

Position accuracy

±2.5 cm in typical environments

Update rate

Up to 200 Hz

Initialization time

<1 second

Maximum Velocity

20 m/s with full accuracy

Operating Range

Unlimited (environment-dependent)

Drift

<0.1% of distance traveled
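To make these figures concrete, the short calculation below derives three quantities from them: the time between updates at 200 Hz, how far a target moving at 20 m/s travels between two updates, and the drift bound over a given distance. The function names and the 1 km example distance are illustrative choices, not part of the product.

```python
def update_period_s(rate_hz: float) -> float:
    """Time between two consecutive updates."""
    return 1.0 / rate_hz


def travel_per_update_m(velocity_mps: float, rate_hz: float) -> float:
    """Distance covered between two updates at a constant velocity."""
    return velocity_mps / rate_hz


def drift_bound_m(distance_m: float, drift_fraction: float = 0.001) -> float:
    """Upper bound on drift for a drift rate of 0.1% of distance traveled."""
    return distance_m * drift_fraction


print(f"update period:      {update_period_s(200) * 1e3:.1f} ms")         # 5.0 ms
print(f"travel per update:  {travel_per_update_m(20, 200) * 100:.1f} cm")  # 10.0 cm
print(f"drift bound (1 km): {drift_bound_m(1000):.2f} m")                  # 1.00 m
```

At the maximum stated update rate, a target at the maximum stated velocity moves about 10 cm between updates, and the stated drift rate corresponds to less than 1 m over 1 km traveled.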

Benefits

Edge-Ready Performance

Optimized to run efficiently on small, less powerful hardware—perfect for embedded systems, drones, and mobile devices.

Multimodal Compatibility

Supports a wide range of sensor inputs including RGB, thermal, infrared, and more—enabling robust tracking across diverse environments.

Real-Time Capable

Designed for speed and responsiveness, delivering high-accuracy tracking with minimal latency in dynamic, real-world scenarios.

Why Single Object Tracking - IR | EO?

Single Object Tracking - IR | EO (SOT) delivers the focus and efficiency needed when following one specific target matters most. It enables fast, accurate, and resource-friendly tracking—ideal for scenarios like surveillance, sports analytics, robotics, and autonomous vehicles. By concentrating on a single object, SOT minimizes computational load while maximizing precision, even in crowded or fast-changing environments.

What sets our approach apart is our unmatched versatility: our models and datasets are designed to support a wide range of camera sensors, target platforms, and real-world use cases.

Whether you're deploying on drones with thermal cameras, mobile devices, or embedded systems in vehicles, our tracking technology adapts seamlessly—making it easy to integrate into virtually any system or workflow.

High Precision, Low Overhead

Versatile Across Use Cases

Seamless Integration

Features

To deliver fast, accurate, and reliable performance, Single Object Tracking software is built from several essential components. Each part plays a critical role in ensuring the system can consistently follow a target across frames, adapt to changing conditions, and run efficiently across different hardware platforms. Below is an overview of the key building blocks that power a robust SOT solution.

Object Initialization Module - Allows the user or system to specify the target object (e.g., via bounding box or point click) in the first frame.

Feature Extraction Backbone - Neural network (e.g., CNN, Transformer) that extracts discriminative features from both the target and search regions.

Tracking Algorithm / Core Tracker - Compares the target features with subsequent frames to localize the object (e.g., Siamese network, transformer-based matcher, correlation filters).

Update Mechanism - Determines when and how to update the object appearance model over time to handle changes in scale, pose, or lighting.

Platform Optimization Layer - Ensures the software is optimized for specific deployment targets (e.g., TensorRT for NVIDIA, ONNX, ARM-friendly models).

Real-Time Processing Engine - Enables high FPS performance with low latency—critical for real-time applications like robotics or AR.

Interface & Integration API - Provides clean input/output APIs for embedding the tracker in larger systems (e.g., C++, Python, ROS, gRPC).
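The way these building blocks fit together can be illustrated with a toy tracker. The sketch below is not the product's implementation: it swaps the neural feature backbone for raw pixel patches and uses normalized cross-correlation in a small search window as the core tracker, with an exponential moving average as the update mechanism. The class name, parameters, and thresholds are assumptions chosen for the example.

```python
import numpy as np


class TemplateTracker:
    """Toy single-object tracker: initialization, matching, and model update."""

    def __init__(self, frame, box, search_margin=8, update_rate=0.1, min_score=0.5):
        # Object initialization: the target is given as a box (x, y, w, h).
        x, y, w, h = box
        self.box = (x, y, w, h)
        self.template = frame[y:y + h, x:x + w].astype(np.float64)
        self.search_margin = search_margin
        self.update_rate = update_rate
        self.min_score = min_score

    @staticmethod
    def _ncc(a, b):
        """Normalized cross-correlation of two equally sized patches."""
        a = a - a.mean()
        b = b - b.mean()
        denom = np.sqrt((a * a).sum() * (b * b).sum())
        return float((a * b).sum() / denom) if denom > 0 else 0.0

    def track(self, frame):
        """Locate the target near its last position; return (box, score)."""
        x0, y0, w, h = self.box
        height, width = frame.shape
        m = self.search_margin
        best_score, best_xy = -1.0, (x0, y0)
        # Core tracker: exhaustive search in a window around the last box.
        for y in range(max(0, y0 - m), min(height - h, y0 + m) + 1):
            for x in range(max(0, x0 - m), min(width - w, x0 + m) + 1):
                patch = frame[y:y + h, x:x + w].astype(np.float64)
                score = self._ncc(self.template, patch)
                if score > best_score:
                    best_score, best_xy = score, (x, y)
        self.box = (best_xy[0], best_xy[1], w, h)
        # Update mechanism: blend in the new appearance only for confident matches.
        if best_score >= self.min_score:
            x, y = best_xy
            patch = frame[y:y + h, x:x + w].astype(np.float64)
            self.template = (1 - self.update_rate) * self.template + self.update_rate * patch
        return self.box, best_score


def make_frame(target, top_left, shape=(64, 64)):
    """Synthetic grayscale frame: a textured target on a black background."""
    frame = np.zeros(shape)
    x, y = top_left
    h, w = target.shape
    frame[y:y + h, x:x + w] = target
    return frame


def run_demo():
    """Track a textured square along a short path; return the estimated boxes."""
    rng = np.random.default_rng(0)
    target = rng.random((12, 12))
    path = [(10, 10), (13, 12), (17, 15), (20, 19)]
    tracker = TemplateTracker(make_frame(target, path[0]), (10, 10, 12, 12))
    return [tracker.track(make_frame(target, p))[0] for p in path[1:]]


print(run_demo())
```

A production tracker would replace the pixel-level search with learned features and an optimized matcher, but the control flow (initialize once, match per frame, update the appearance model selectively) stays the same.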