See how it works

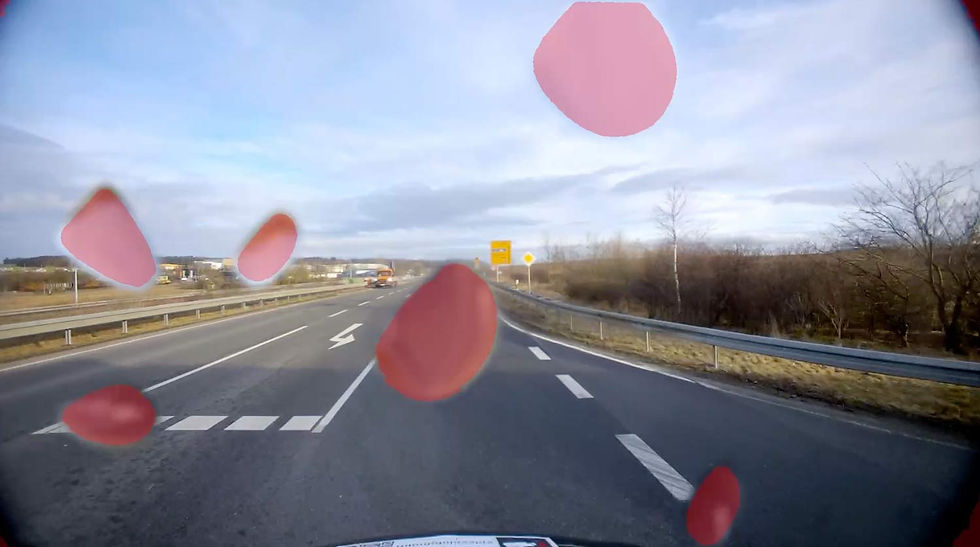

Simulation of a defense scenario for locating objects using an infrared camera

Benefits

Test Safely and Efficiently

Simulate complex scenarios without real-world risk.

Accelerate Development

Validate AI systems faster with repeatable, high-fidelity environments.

Reduce Costs

Minimize time, resources, and field testing with virtual sensor data generation.

SPLEENLAB®

Simulation

Enables realistic, high-fidelity testing of AI perception systems by simulating sensor data and environments for faster, safer development.

Features

Spleenlab’s simulation platform offers a comprehensive toolset for developing and validating AI perception systems in realistic, controlled test environments. Its capabilities include:

High-Fidelity Sensor Simulation - Realistic data generation for RGB cameras, thermal imaging, LiDAR, radar, and IMUs.

Dynamic 3D Environments - Simulate complex and customizable real-world scenarios, including weather, terrain, and lighting.

Edge Case Generation - Easily create rare or hazardous scenarios to test system robustness.

Sensor Fusion Validation - Test and tune AI models using multi-sensor input streams for optimal performance.

Real-Time Feedback - Monitor and debug AI behavior live during simulation runs.

Scenario Scripting & Automation - Define repeatable test cases to validate perception and decision-making systems.

Seamless AI Runtime Integration - Run the same AI stack used in deployment for accurate performance validation.

Scalable Testing Infrastructure - Run multiple scenarios in parallel to accelerate development and CI pipelines.
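The scenario scripting, edge-case generation, and parallel-testing features listed above can be illustrated with a small, tool-agnostic sketch. It assumes that a scenario is described by weather, lighting, and terrain values plus a fixed random seed. The parameter values, the `Scenario` type, and the `run_scenario` stub are hypothetical and are not Spleenlab's API. The point is only to show how a fixed seed makes a test matrix repeatable and how an edge-case rule can pick rare combinations out of that matrix.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from itertools import product
import random

# Hypothetical scenario dimensions (illustrative only, not a real product schema).
WEATHER = ("clear", "rain", "fog")
LIGHTING = ("day", "dusk", "night")
TERRAIN = ("urban", "forest")


@dataclass(frozen=True)
class Scenario:
    scenario_id: int
    weather: str
    lighting: str
    terrain: str
    seed: int  # fixed per scenario so a run can be reproduced exactly


def build_test_matrix(base_seed: int = 42) -> list[Scenario]:
    """Enumerate every weather/lighting/terrain combination with a deterministic seed."""
    rng = random.Random(base_seed)
    return [
        Scenario(i, weather, light, terrain, rng.randrange(2**32))
        for i, (weather, light, terrain) in enumerate(product(WEATHER, LIGHTING, TERRAIN))
    ]


def is_edge_case(s: Scenario) -> bool:
    # Example rule: fog at night is treated as a rare, low-visibility case.
    return s.weather == "fog" and s.lighting == "night"


def run_scenario(s: Scenario) -> tuple[int, bool]:
    # Hypothetical stand-in for a simulator run: derives a pass/fail
    # result from the scenario seed so the outcome is deterministic.
    rng = random.Random(s.seed)
    return s.scenario_id, rng.random() > 0.1


def run_all(scenarios: list[Scenario], workers: int = 4) -> list[tuple[int, bool]]:
    # Executor.map yields results in input order, so reports are stable across runs.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_scenario, scenarios))


if __name__ == "__main__":
    matrix = build_test_matrix()
    print(len(matrix), "scenarios")  # 3 * 3 * 2 = 18
    print(sum(is_edge_case(s) for s in matrix), "edge cases")  # fog + night in 2 terrains = 2
    results = run_all(matrix)
    print(sum(ok for _, ok in results), "passed")
```

Threads are used here only to keep the sketch self-contained. A real pipeline would more likely dispatch each scenario to its own process, container, or CI job.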

These performance metrics are for illustrative purposes only and are based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

| Specification | Value |
|---|---|
| Update rate | Up to 60 Hz |
| Initialization time | < 1 second |
| Supported companion hardware | NVIDIA Jetson, ModalAI VOXL 2 / VOXL 2 Mini, Qualcomm RB5, IMX7, IMX8, Raspberry Pi |
| Base software / OS | Linux (Docker required) |
| Interfaces | ROS 2 |
| Input: sensors | Any camera type (sensor-agnostic) |
| Input: data | Camera video frames |
| Output: data | Stabilized video frames; stabilization vector |

| | Minimum | Recommended |
|---|---|---|
| RAM | 2 GB | 4 GB |
| Storage | 20 GB | 50 GB |
| Camera | 640 × 480 px, 10 FPS | 1920 × 1080 px, 30 FPS |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.

Performance Metrics

| Metric | Value |
|---|---|
| Position accuracy | ±2.5 cm in typical environments |
| Update rate | Up to 200 Hz |
| Initialization time | < 1 second |
| Maximum velocity | 20 m/s with full accuracy |
| Operating range | Unlimited (environment-dependent) |
| Drift | < 0.1% of distance traveled |
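The page does not say how drift is measured. A common convention for odometry and localization is the end-point position error divided by the distance actually traveled, so a 0.1% budget allows at most 1 m of accumulated error over a 1 km run. The sketch below computes that ratio under this assumed definition; the function names and the sample trajectory are hypothetical and are not part of any Spleenlab interface.

```python
import math

Point = tuple[float, float, float]


def path_length(trajectory: list[Point]) -> float:
    """Sum of straight-line distances between consecutive positions."""
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def relative_drift_percent(estimated: list[Point], ground_truth: list[Point]) -> float:
    """End-point position error as a percentage of the ground-truth distance traveled."""
    if len(estimated) != len(ground_truth) or len(ground_truth) < 2:
        raise ValueError("trajectories must be the same length with at least two poses")
    distance = path_length(ground_truth)
    if distance == 0:
        raise ValueError("ground-truth trajectory has zero length")
    end_error = math.dist(estimated[-1], ground_truth[-1])
    return 100.0 * end_error / distance


if __name__ == "__main__":
    # Straight 1 km run sampled every 100 m; the estimate ends 0.8 m off to the side.
    truth = [(100.0 * i, 0.0, 0.0) for i in range(11)]
    estimate = truth[:-1] + [(1000.0, 0.8, 0.0)]
    drift = relative_drift_percent(estimate, truth)
    print(round(drift, 3))  # 0.08, i.e. within a 0.1% budget
```

Other definitions exist (for example, averaging relative error over fixed-length sub-segments), so a published drift figure is only comparable when the measurement method is known.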

Why Simulation?

Developing and validating AI perception systems in the real world is time-consuming, costly, and often unsafe, especially for autonomous vehicles and drones. Simulation provides a controlled, repeatable environment for testing edge cases, sensor setups, and AI behavior without physical risk.

Spleenlab’s simulation tools shorten development cycles by generating realistic sensor data and dynamic environments. Teams can fine-tune their systems, validate performance, and reach deployment sooner while relying less on real-world testing.

Edge Case Coverage

Seamless AI Integration

Continuous Validation