VISIONAIRY®

Occupancy Grids

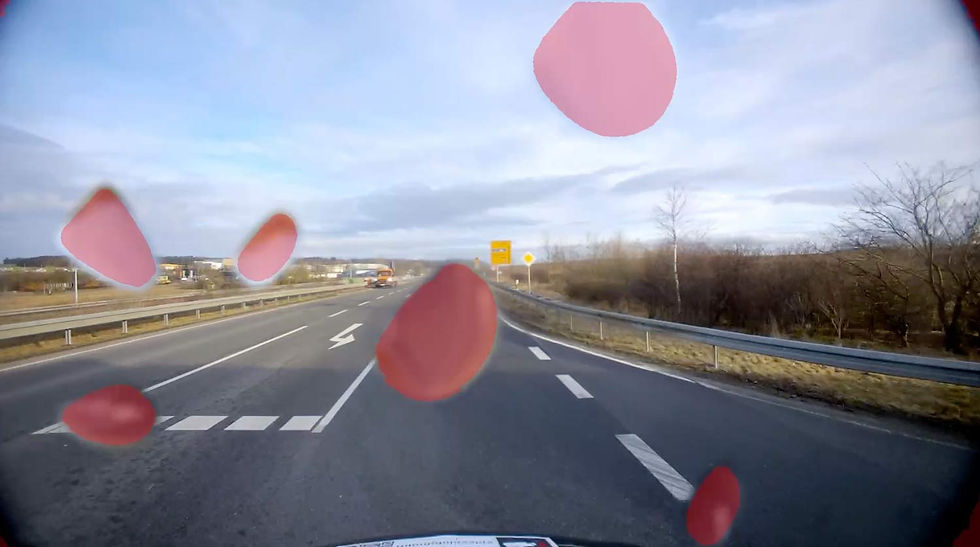

Delivers clear, real-time spatial awareness by mapping free and occupied areas, empowering autonomous systems to navigate confidently and avoid obstacles with precision.

Why Occupancy Grids?

VISIONAIRY® Occupancy Grids transform complex environments into clear, actionable maps that help autonomous systems navigate safely and avoid obstacles with ease. By instantly identifying free, occupied, and unknown spaces, they enable smarter, faster decision-making in any setting.

With real-time updates, occupancy grids keep your system responsive to changing environments. This supports reliable performance for drones, robots, and vehicles, and improves safety and efficiency wherever autonomy matters most.

Clear, actionable maps for safe and efficient navigation

Real-time updates for rapid adaptation to changes

Enhances reliability and performance in autonomous systems

Performance Metrics

Position accuracy: ±2.5 cm in typical environments

Update rate: Up to 200 Hz

Initialization time: <1 second

Maximum velocity: 20 m/s with full accuracy

Operating range: Unlimited (environment-dependent)

Drift: <0.1% of distance traveled
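To put the figures above in perspective, the following minimal sketch works through the arithmetic behind two of them: how far a platform moves between two updates at the maximum velocity and update rate, and what the drift bound means over a given distance. The function names and the 1 km trajectory are assumptions chosen for illustration, not part of the product.

```python
def distance_per_update(speed_m_s: float, rate_hz: float) -> float:
    """Distance travelled between two consecutive updates, in metres."""
    return speed_m_s / rate_hz


def drift_bound(distance_m: float, drift_fraction: float = 0.001) -> float:
    """Upper bound on accumulated drift, in metres.

    drift_fraction = 0.001 corresponds to the quoted 0.1% of distance traveled.
    """
    return distance_m * drift_fraction


# Quoted maximum velocity (20 m/s) and maximum update rate (200 Hz).
print(f"{distance_per_update(20.0, 200.0):.2f} m between updates")  # 0.10 m between updates

# Assumed example trajectory of 1 km.
print(f"{drift_bound(1000.0):.2f} m drift bound after 1 km")  # 1.00 m drift bound after 1 km
```

At lower update rates or higher speeds the distance covered between updates grows proportionally, which is worth checking against the chosen grid resolution.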

Benefits

Improved Navigation Accuracy

Enhances path planning by precisely identifying free and occupied spaces for safer movement.

Increased Safety

Minimizes collision risks by providing clear obstacle detection in complex environments.

Scalable Integration

Easily adapts to different autonomous platforms, from drones to ground vehicles.

Features

Here are the core features that make our occupancy grid solution essential for precise, reliable autonomous navigation:

Dynamic Environment Mapping - Continuously updates the grid to reflect real-time changes in the surroundings.

Clear Space Classification - Distinguishes between free, occupied, and unknown areas for accurate navigation.

High-Resolution Grids - Provides detailed spatial information to improve obstacle detection and path planning.

Seamless Sensor Integration - Combines data from LiDAR, cameras, and other sensors for a comprehensive environment view.

Optimized for Efficiency - Designed for fast processing on embedded systems to support real-time decision-making.
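As an illustration of the free / occupied / unknown classification described in the features above, here is a minimal sketch of a 2D occupancy grid using the widely used log-odds update. It is not the VISIONAIRY® implementation: the class name, the increment values, and the probability thresholds are all assumptions chosen for the example.

```python
import math

FREE, OCCUPIED, UNKNOWN = "free", "occupied", "unknown"


class OccupancyGrid:
    """Minimal 2D log-odds occupancy grid (illustrative, parameters assumed)."""

    def __init__(self, width, height, resolution_m,
                 l_hit=0.85, l_miss=-0.4, l_min=-4.0, l_max=4.0):
        self.width = width
        self.height = height
        self.resolution_m = resolution_m      # edge length of one cell in metres
        self.l_hit, self.l_miss = l_hit, l_miss
        self.l_min, self.l_max = l_min, l_max
        # Log-odds 0.0 means probability 0.5, i.e. nothing is known yet.
        self.log_odds = [[0.0] * width for _ in range(height)]

    def world_to_cell(self, x_m, y_m):
        """Convert metric coordinates (grid origin at 0, 0) to (col, row)."""
        return (int(math.floor(x_m / self.resolution_m)),
                int(math.floor(y_m / self.resolution_m)))

    def update(self, col, row, hit):
        """Fold one measurement into a cell: hit=True for an obstacle return,
        hit=False when a sensor ray passed through the cell."""
        if not (0 <= col < self.width and 0 <= row < self.height):
            return  # measurement outside the mapped area is ignored
        value = self.log_odds[row][col] + (self.l_hit if hit else self.l_miss)
        # Clamping keeps cells able to change state when the scene changes.
        self.log_odds[row][col] = max(self.l_min, min(self.l_max, value))

    def probability(self, col, row):
        """Occupancy probability recovered from the stored log-odds."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[row][col]))

    def classify(self, col, row, p_free=0.35, p_occ=0.65):
        p = self.probability(col, row)
        if p >= p_occ:
            return OCCUPIED
        if p <= p_free:
            return FREE
        return UNKNOWN


grid = OccupancyGrid(width=10, height=10, resolution_m=0.1)
grid.update(*grid.world_to_cell(0.35, 0.42), hit=True)   # obstacle return
grid.update(1, 1, hit=False)                             # ray passed through twice
grid.update(1, 1, hit=False)

print(grid.classify(3, 4))  # occupied
print(grid.classify(1, 1))  # free
print(grid.classify(7, 7))  # unknown
```

Storing log-odds rather than probabilities turns each measurement into a single addition per cell, which is one reason this representation is common on embedded hardware.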

These performance metrics are for demonstrative purposes only, based on configurations with proven results. Actual performance may vary by setup. Our algorithms are optimized for use with any chip, platform, or sensor. Contact us for details.

Reprojection error: ±1 px in typical environments

Runtime: 30-90 min

Required recording length: 3-5 min

Supported companion hardware: NVIDIA Jetson, AMD64 device with NVIDIA GPU

Supported flight controllers: PX4, APX4, ArduPilot

Base software/OS: Linux, Docker required

Interfaces: ROS 2 bag

Input - Sensors: Any type of camera (sensor agnostic); any type of IMU or GPS

Input - Data: 1) Camera video frames, 2) Raw IMU measurements, 3) GPS points

Output - Data: Intrinsic and extrinsic calibration in 'kalibr' format
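For readers unfamiliar with the kalibr format, the sketch below shows how the pinhole intrinsics of one camera entry might be used once loaded. It assumes the usual kalibr camchain layout, in which `intrinsics` is ordered `[fu, fv, pu, pv]`. All numeric values are made up, the helper names are hypothetical, and lens distortion is deliberately ignored to keep the example short.

```python
import math

# Hypothetical values laid out like one camera entry of a kalibr camchain file.
cam0 = {
    "camera_model": "pinhole",
    "intrinsics": [460.0, 460.0, 320.0, 240.0],  # fu, fv, pu, pv (made-up numbers)
    "resolution": [640, 480],
}


def intrinsic_matrix(intrinsics):
    """Build the 3x3 camera matrix K from [fu, fv, pu, pv]."""
    fu, fv, pu, pv = intrinsics
    return [[fu, 0.0, pu],
            [0.0, fv, pv],
            [0.0, 0.0, 1.0]]


def project(point_cam, intrinsics):
    """Project a 3D point in the camera frame to pixel coordinates.

    Distortion is not modelled here; a real pipeline would apply the
    distortion model stored alongside the intrinsics.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    fu, fv, pu, pv = intrinsics
    return (fu * x / z + pu, fv * y / z + pv)


def reprojection_error_px(observed, predicted):
    """Euclidean pixel distance between a detection and its projection."""
    return math.hypot(observed[0] - predicted[0], observed[1] - predicted[1])


predicted = project((1.0, 0.5, 2.0), cam0["intrinsics"])
print(predicted)                                         # (550.0, 355.0)
print(reprojection_error_px((553.0, 359.0), predicted))  # 5.0
```

The reprojection error computed this way is the same kind of quantity as the per-pixel figure quoted above: the smaller it is across a recording, the better the calibration explains the observed image points.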

| Component | Minimum | Recommended |
|---|---|---|
| RAM | 16 GB | 32 GB |
| Storage | 30 GB | 50 GB |
| Camera | 640 x 480 px, 10 FPS | 1920 x 1080 px, 30 FPS |
| IMU | 100 Hz or GPS | 300 Hz or GPS |

The information provided reflects recommended hardware specifications based on insights gained from successful customer projects and integrations. These recommendations are not limitations, and actual requirements may vary depending on the specific configuration.

Our algorithms are compatible with any chip, platform, sensor, and individual configuration. Please contact us for further information.